Hey: wanna talk about ancient means of outwitting tyrannical leaders with me all summer? This morning I was doing a deep dive into Kehinde Wiley’s Judith and Holofernes as prep for one of the sessions, and talk about a TOPIC. (Click that link and have your brain melted. If you’re in NC, go visit the real thing at the NCMA and have an otherworldly experience.) Wednesdays at noon ET, starting July 9. Register here.

This week, a federal judge ruled that AI company Anthropic did not break the law by training its chatbot, Claude, on millions of copyrighted books. It’s a huge (and dangerous) precedent, because it was the first legal case to challenge the widespread theft that all the big AI companies currently practice.

You might also have seen that the massive federal spending bill currently includes a ten-year moratorium on any state regulation of AI. (The Senate parliamentarian has requested, among her many other kiboshes, that this portion of the bill be rewritten, so its fate is currently in question.)

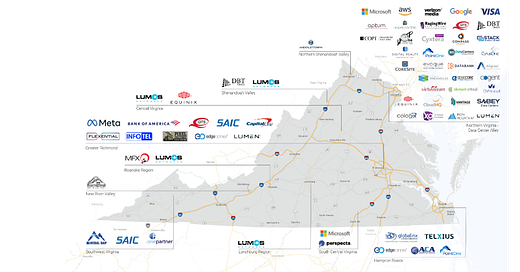

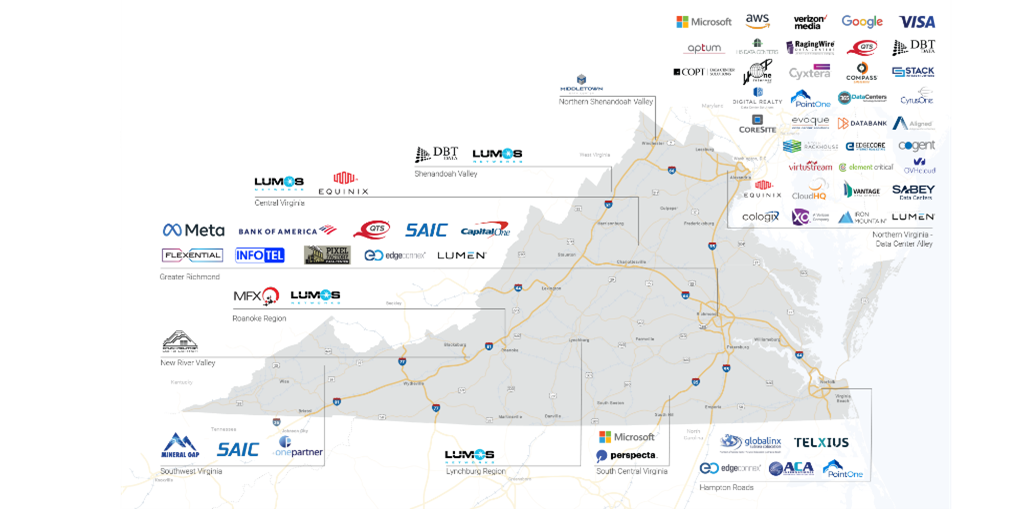

Also this week, neighboring Botetourt County announced with much fanfare the purchase of a giant plot of land for Google’s latest data center, and a church I love is starting the process of deciding whether or not to sell their property to a data center developer in one of the counties vying for the title of Data Center Capital of the World. These questions about technology are not distant or irrelevant; they’re here.

I am the farthest thing from a tech expert, but I do have a tiny bit of experience with AI. And that tiny bit of experience led to some Very Strong Opinions. Namely: AI as it is currently being developed and deployed is both very dumb and very dangerous.

One of the gigs I’ve had in this weird vocational season was training AI. Not being trained in how to use artificial intelligence, but training the bots and algorithms themselves to act more…human-ish.

I got recruited for that job because my LinkedIn profile said I was a writer, and the bots can’t write. The initial pay ($40/hour, plus regular generous bonuses) was enticing, and I was very curious. Plus, I was (and still am) casting around for what the heck I’m supposed to be doing with my life. I learned quite a few things doing that job.

First: the companies building AI are not well-run, and they do not treat their employees well. In the months that I did this gig, my pay was docked, the scope and timeframe of the work changed multiple times, and I was subjected to the whims of a byzantine machine any time I had a question or complaint. I could rarely find a human being with whom to interact, and when I did, that human being was both younger and less informed than I was. Truly, the worst client I’ve ever worked with.

Second: AI is extraordinarily dumb. You already know this. The chatbots don’t understand intuition. They cannot read a room. AI couldn’t identify humor if humor walked up and punched it in the face. Similarly, and much more worrisome, bots don’t have ethics, and they are incapable of situational awareness. Chatbots and AI systems do their work based on the rules some human sets for them, but humans do our work, whether we recognize it or not, much more by reading the room than by following the rules. This job gave me a newfound appreciation for the power and mystery of human intelligence.

Third: Humans are just always going to be better than bots at anything other than raw number crunching. AI is great for sorting and categorizing things (mostly). But when it comes to creating, imagining, writing, storytelling, innovating, existing as full-blown living beings in an ever-changing world, and relating to all manner of other full-blown living beings navigating the same slippery landscapes, humans beat bots every. single. time. Every time! EVERY TIME. I do not want to read your ChatGPT-junkified email or proposal. I want to read YOUR words, with YOUR grammatical mistakes and YOUR charming turns of phrase. I don’t want to interact with any more automated processes or messages than I’m already forced to; I want to interact with YOU.

I’ve got plenty more anti-technology screed in me waiting to be written, but you’d be better off reading why Wendell Berry refused to buy a computer. Here are his rules for new technology, from 1987:

1. The new tool should be cheaper than the one it replaces.
2. It should be at least as small in scale as the one it replaces.
3. It should do work that is clearly and demonstrably better than the one it replaces.
4. It should use less energy than the one it replaces. (!!!!!!!!!!!)
5. If possible, it should use some form of solar energy, such as that of the body. (!!!!!!!!!)
6. It should be repairable by a person of ordinary intelligence, provided that he or she has the necessary tools.
7. It should be purchasable and repairable as near to home as possible.
8. It should come from a small, privately owned shop or store that will take it back for maintenance and repair.
9. It should not replace or disrupt anything good that already exists, and this includes family and community relationships. (!!!!!!!!!!!!!!!)

And, look, Berry’s argument hinges on the fact that his very dutiful wife typed up everything he ever wrote in longhand, and he believed this was part and parcel of his imagined Good Life. I do not have a wife, and I will never be anyone’s dutiful typist, either. Still, his argument stands.

ChatGPT is the least of our AI worries, but I still refuse to use it. How can I rant against the monstrosities of data centers gobbling up the places I love and the energy that keeps us from broiling to death if I willingly participate in the same kind of thieving, cheating, disruptive stuff?

Despite having a job where I frequently interact with and “design” AI, I completely agree with you. Its application for data ingestion and manipulation (i.e., “math”) is valuable, but there’s already significant research showing how frequent “creative” use (can we even call it that?!) actually kills the mental pathways we would otherwise use to create ourselves. Also, the amount of power (and therefore water) it uses, especially for tasks like image generation, is obscene.

This year’s Christian ethics question on the Episcopal Church’s General Ordination Exam was on AI. We had to cite a theologian to develop a Christian ethical framework, and then apply that framework to deciding how to use AI, citing both positive and negative perspectives.